16 questions about reinforcement learning

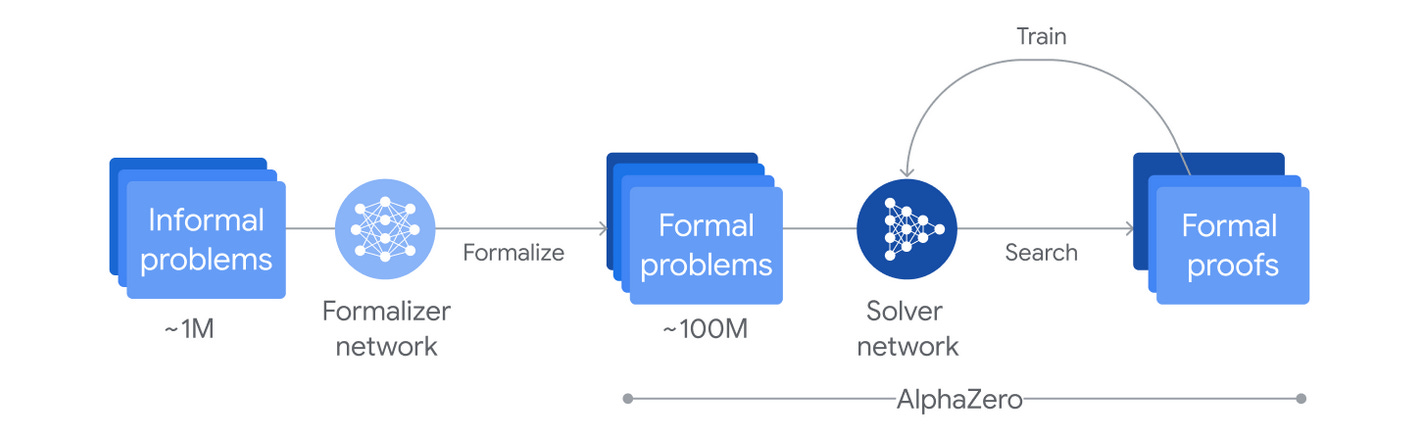

AlphaProof constructed a large curriculum of tasks by randomly misformalizing math olympiad problems, creating many problems of varying difficulty, from trivial up to full olympiad difficulty. This is a beautiful idea for building a curriculum; I dub it “task mutations”. Do task mutations work in other domains?
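A toy sketch of the task-mutation idea, not AlphaProof's actual pipeline. Everything here is a hypothetical stand-in: a "task" is finding any accepted integer in a search space, a mutation rescales that space (so mutants range from trivial to harder than the seed), and a uniform-guessing policy stands in for the model. The curriculum keeps only mutants whose pass rate is neither near 0 nor near 1, since those are the tasks that produce a learning signal.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """Hypothetical verifiable task: find any x in [0, space) accepted by the checker."""
    space: int        # size of the search space (the difficulty knob in this toy)
    n_solutions: int  # how many values of x the checker accepts


def mutate(task: Task, rng: random.Random) -> Task:
    """Randomly perturb a seed task; some mutants become trivial, others harder."""
    factor = rng.choice([0.001, 0.01, 0.05, 0.2, 1, 5])
    new_space = max(task.n_solutions, int(task.space * factor))
    return Task(space=new_space, n_solutions=task.n_solutions)


def pass_rate(task: Task, attempts: int = 8) -> float:
    """Success probability of a toy policy that guesses uniformly `attempts` times."""
    p_single = task.n_solutions / task.space
    return 1 - (1 - p_single) ** attempts


def build_curriculum(seed: Task, n_mutants: int, lo: float = 0.05,
                     hi: float = 0.95, rng_seed: int = 0) -> list[Task]:
    """Generate mutants, drop those with (almost) no reward variance, sort easiest first."""
    rng = random.Random(rng_seed)
    mutants = {mutate(seed, rng) for _ in range(n_mutants)}
    useful = [t for t in mutants if lo <= pass_rate(t) <= hi]
    return sorted(useful, key=pass_rate, reverse=True)
```

With a seed of `Task(space=1000, n_solutions=1)` the seed itself is in the zero-reward regime (pass rate under 1%), while the filter keeps the intermediate mutants with search spaces 10 and 50 and discards both the trivial one and the ones as hard as or harder than the seed.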

Getting RL from 0% to 1% is a big deal. But what about getting from 99% to 100%? These problems seem symmetrical to me: whether you are almost always wrong or almost always correct, the reward barely varies between samples, so you get almost no learning signal either way. Are the RL updates on correct samples reinforcing correct reasoning in a way that helps even when the model gets nearly everything correct, or is naive RL simply limited as a method for reaching 100% reliability, in the same way it doesn't work for getting from 0% to 1%?
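One way to see the symmetry, assuming a group-normalized advantage like the one used in GRPO (the question doesn't name an algorithm, so this is an assumption): if every sample in a group gets the same reward, all advantages are zero and the update vanishes, whether the group is all-wrong or all-right. The function names below are illustrative.

```python
from statistics import mean, pstdev


def group_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: reward minus group mean, divided by group std."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def expected_signal(p: float) -> float:
    """Variance of a Bernoulli(p) pass/fail reward; it vanishes at p = 0 and p = 1."""
    return p * (1 - p)
```

`expected_signal(0.01)` and `expected_signal(0.99)` are equal, which is the 0%/100% symmetry in miniature: at 99% accuracy most groups are all-correct and contribute nothing, and the rare failure is the only source of gradient.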

Is there a canonical best method for getting out of the zero-reward regime, or is it always ad hoc?

The amount of information in a typical RLVR (RL with verifiable rewards) episode is at most 1 bit: we only learn whether the solution is correct or not. Is there a way to learn more per episode?
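The 1-bit bound is the Shannon entropy of a pass/fail outcome. A short sketch, assuming the reward is a Bernoulli variable with success probability `p` (a hypothetical parameter): the bound is reached only at p = 0.5, and near 0% or 100% accuracy an episode carries far less than a bit.

```python
import math


def bits_per_episode(p: float) -> float:
    """Entropy in bits of a binary reward that equals 1 with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))
```

For example, at 99% accuracy the reward carries about 0.08 bits per episode.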

My intuition is that on-policy learning generalizes much better by default than learning from demonstrations, because LLMs ultimately need to learn how to make decisions in their own chains of thought, not in another model’s (or a human’s) chains of thought. Is this correct?

Relatedly: Why does “RL on a smarter model, distill to a weaker model” perform so much better than “RL on a weaker model”?

What is the main factor distinguishing problems where LLM-as-a-judge soft rewards work (and generalize to the hard rewards) from problems where we need RLVR?

It seems to me that training a person to be a better problem solver in any domain makes them, by default, better at judging solutions to problems in that domain. Why does this not straightforwardly work for an LLM-as-a-judge RL loop that makes a model better, where the reward is produced by the latest version of the model judging how well it did?

Is it possible to just tell the model not to reward hack? That is: we tell it what we intend the reward function to measure, so that when it is about to reward hack, it recognizes this and decides not to, even when the reward is exploitable?

I believe policy gradient RL for creative writing will not work: even if we build a reward function that has 0.99 correlation with the true “taste” feature for good writing, it is easier to reward hack than to write well. Because writing well is difficult, optimizing the part of the reward that is orthogonal to good writing will be much easier than optimizing for good writing itself. Is there a simple fix for this?
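A toy model of this argument, under loudly stated assumptions: the proxy reward is `rho * T + sqrt(1 - rho**2) * O`, where `T` is true writing quality and `O` an independent orthogonal feature (so the proxy has correlation `rho` with `T`), and the policy has a fixed effort budget with quadratic costs for moving each feature. The cost constants are made up; the point is only how the optimum splits effort.

```python
import math


def optimal_effort(rho: float, cost_true: float, cost_orth: float,
                   budget: float = 1.0) -> tuple[float, float]:
    """Maximize proxy gain a*t + b*o subject to cost_true*t**2 + cost_orth*o**2 = budget.

    Lagrange multipliers give t proportional to a / cost_true and o proportional
    to b / cost_orth; `scale` rescales both so the budget is spent exactly.
    """
    a, b = rho, math.sqrt(1 - rho**2)
    scale = math.sqrt((a**2 / cost_true + b**2 / cost_orth) / budget)
    return a / (cost_true * scale), b / (cost_orth * scale)


def genuine_share(rho: float, cost_true: float, cost_orth: float) -> float:
    """Fraction of the optimized proxy gain that comes from real improvement in T."""
    t, o = optimal_effort(rho, cost_true, cost_orth)
    a, b = rho, math.sqrt(1 - rho**2)
    return a * t / (a * t + b * o)
```

With equal costs, 98% of the proxy gain is genuine. If improving the writing is 100 times as costly as moving the orthogonal feature (an arbitrary illustrative ratio), only about a third of the proxy gain comes from better writing, even at 0.99 correlation.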

Can we experimentally track the point at which models start to reward hack soft rewards, in a simpler setting with two rewards where one approximates the other? Say, a smaller model and a larger model trained as reward models on the same data? Would this be a good benchmark for anti-reward-hacking methods?
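A minimal sketch of such an experiment, using best-of-n selection as the optimizer, which makes the optimization pressure easy to dial and to measure in KL. This is roughly the gold/proxy setup of Gao et al. (2022). Here both reward models are hypothetical Gaussian stand-ins: the "gold" score is a standard normal and the "proxy" is gold plus independent noise. The KL of best-of-n from the base policy is log n - (n - 1)/n nats.

```python
import math
import random
from statistics import mean


def bon_kl(n: int) -> float:
    """KL(best-of-n || base policy) in nats."""
    return math.log(n) - (n - 1) / n


def simulate_bon(n: int, trials: int = 2000, noise: float = 1.0,
                 seed: int = 0) -> tuple[float, float]:
    """Select the best of n samples by proxy score; return mean proxy and gold of the winners."""
    rng = random.Random(seed)
    proxy_scores, gold_scores = [], []
    for _ in range(trials):
        samples = []
        for _ in range(n):
            gold = rng.gauss(0, 1)               # stand-in for the larger reward model
            proxy = gold + rng.gauss(0, noise)   # stand-in for the smaller reward model
            samples.append((proxy, gold))
        best_proxy, best_gold = max(samples)     # selection sees only the proxy
        proxy_scores.append(best_proxy)
        gold_scores.append(best_gold)
    return mean(proxy_scores), mean(gold_scores)
```

Sweeping `n` and plotting both scores against `bon_kl(n)` shows the proxy-gold gap widening with optimization pressure. In this benign Gaussian toy the gold score still rises; the point where gold starts to fall, which is what an anti-reward-hacking benchmark would track, needs real reward models with less well-behaved errors.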

Dwarkesh Patel says: “Think about a repeat entrepreneur. We say that she has a ton of hard-won wisdom and experience. Very little of that learning comes from the one bit of outcome from her previous episode (whether the startup succeeded or not).” How do humans learn so much more sample-efficiently?

How easy is it to automatically build RL environments? This seems to be a key crux for timelines: if it is hard, progress stays bottlenecked by human involvement in RL environment design.

What metrics do we track to see whether RL training starts to generalize, so that models adapt to new environments?

In any sort of optimization process, the models surely internalize, implicitly, what the reward function is. Can we elicit the model’s beliefs about the reward function very reliably? The motivation: an additional objective that makes the model produce a correct description of the training process does not seem like it would conflict with capabilities or alignment in any way, and having this objective spelled out would help with reward hacking.
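One way to write down such an additional objective, as a sketch under assumptions not in the question: $\mathcal{D}$ is the task distribution, $r$ the training reward, $\mathcal{Q}$ a hypothetical set of probe questions about the training setup, $d^\ast(q)$ a reference description answering probe $q$, and $\lambda$ a weighting coefficient.

$$
\mathcal{J}(\theta) \;=\; \mathbb{E}_{x \sim \mathcal{D},\; y \sim \pi_\theta(\cdot \mid x)}\big[\, r(x, y) \,\big] \;+\; \lambda \, \mathbb{E}_{q \sim \mathcal{Q}}\big[\, \log \pi_\theta\big(d^\ast(q) \mid q\big) \,\big]
$$

The first term is the usual RL objective; the second is a supervised likelihood term rewarding an accurate account of the reward function, so the model's stated beliefs about $r$ can be checked directly against $d^\ast$.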

By now we should be able to get empirical evidence on “Reward is not the optimization target”. Do LLMs trained with RL intrinsically and primarily value their reward signal? How does “Reward is not the optimization target” square with this result, where models trained on SWE-bench try to solve similar tasks even when the tasks are impossible, and even when explicitly told not to?

Wow, the 'task mutations' idea is brilliant! Your insights are always so sharp.

Regarding question 11 (tracking reward hacking with a smaller and a larger reward model): Gao et al., “Scaling Laws for Reward Model Overoptimization”, 2022, https://arxiv.org/abs/2210.10760