April 2023 safety news: Supervising AIs improving AIs, LLM memorization, OpinionQA

Better version of the monthly Twitter thread.

Emergent and Predictable Memorization in Large Language Models

Memorization in LLMs is a practical issue: it is both a privacy and a copyright disaster. It is also an important research direction in both machine learning and AI safety; in a sense, memorization is the complement of generalization.

How to predict which strings in the data will be memorized by LLM pretraining?

The new EleutherAI paper, testing on their new Pythia models, shows extrapolating from a partially trained model can help.

For predicting memorization, the standard scaling laws for smaller→larger models do not work. If you want to predict which sentences get memorized in the full 12B model, it turns out the precision decreases as the model size gets closer to 12B.

Tip for model creators: strings that appear early in training get memorized right away or not at all1. If you have a corpus of data which you sort of don’t want to get memorized, but you absolutely need to train on it, put it in the first few batches and check if it has been memorized after the first checkpoint.

Whose Opinions Do Language Models Reflect?

“Alignment with *whose* values?” Everyone in the field got this question at least once. The standard reply is “we’ll figure it out after we can align models with any values at all”, which is technically correct for future superhuman AGI, but is otherwise just a shortcut to avoid uncomfortable discussion about current AI models.

Thanks to the new OpinionQA dataset of US-centric opinion questions, we can measure whose values are current LLMs being aligned to.

(Blue here means less aligned. Not sure why the authors use this strange color scheme.)

Apparently, base models lean low-income moderate/conservative, and finetuned/RLHF models have views similar to high-income American liberals.

Research agenda: Supervising AIs improving AIs

How can we control AI which does iterative research to improve its capabilities?

Focus on AI-generated training data, as it seems riskier than models improving their architectures or algorithms, because of distribution shift.

Eight Things to Know about Large Language Models

Discussing safety research is hard when people don't agree on basic properties of the models. This survey lists evidence for: emergence, LLMs being hard to interpret, difficult to steer towards robust values, and other well-known properties of LLMs people sometimes stubbornly deny.

This is the easiest read of the month. I like Section 9 a lot, most of Sam Bowman’s speculations seem very likely to turn out true if LLM continue to be the dominant AI paradigm in the near future.

Announcing Epoch’s dashboard of key trends and figures in Machine Learning

Epoch releases a dashboard covering compute, model size, data use, hardware efficiency, and increasing investment in AI.

Fun nuggets: Training compute has grown 4x/year since 2010, there's a peculiar absence of 20B-70B models, and the training costs (just the compute) of most expensive models are estimated to be at least 40 million dollars for the final model.

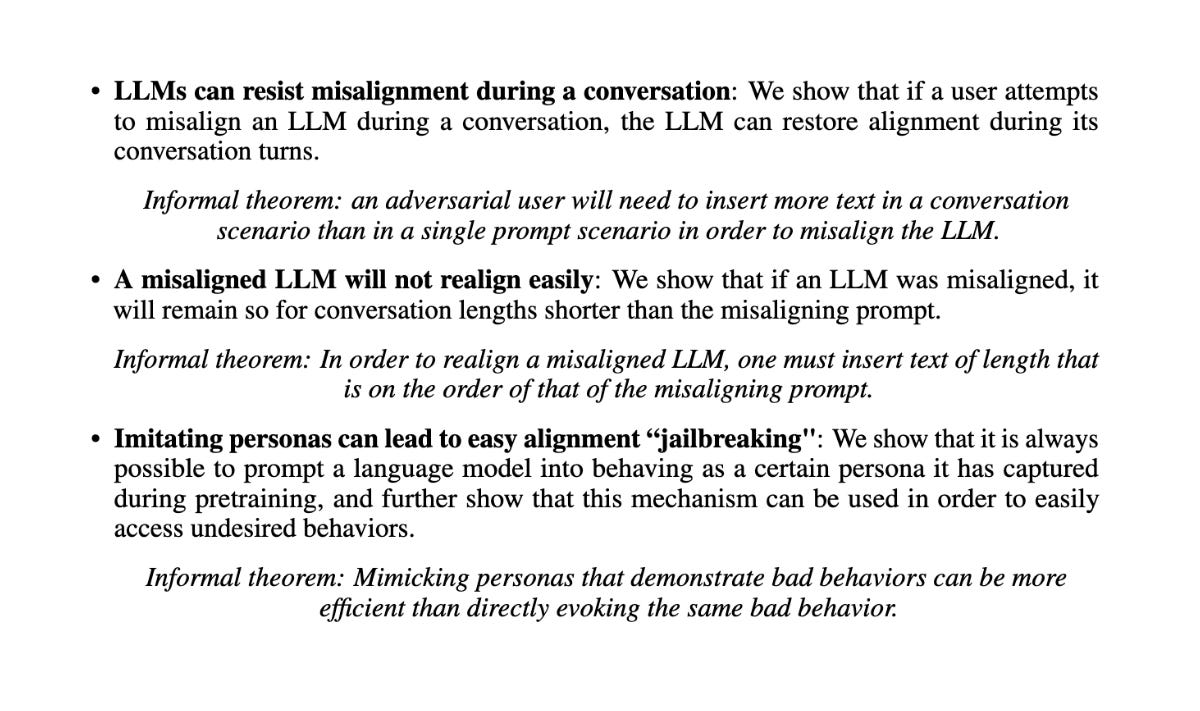

Fundamental Limitations of Alignment in Large Language Models

A paper from a serious industry lab trying to make theoretical sense of LLM jailbreaks and the implications on robust AI alignment.

I applaud this paper because it really needs to be made loud and clear that a GPT-type language model is, for safety purposes, not just a probability distribution. It technically is (if you disregard zero probability prompts), but in any meaningful control metric it is not.

The learned distribution can be arbitrarily close to the distribution you want, and it implies nothing about uniform control over what happens when you prompt the model. As long as some bad behavior has >0 probability, there is a prompt that makes the model display it. The autoregressive model does not care that the prior probability of the prompt is low.

This means post-pretraining alignment is severely limited in the worst case. It is best never to train on bad behavior in the first place. Although, when the model is smart enough to generalize from good to bad behavior, then we’re still screwed? Let’s hope the safety mechanisms around the LLM can prevent low-probability prompts messing with alignment.

The writing is heavily inspired and cites the Waluigi post we discussed previously. I think they misunderstood it, though? The Waluigi effect is, to me, about one specific persona, that emerges by interpolating between “honest agent consistent with the prompt” and “deception in general”. When they cite [Nardo et al., 2023.], what I think they actually want to cite is some sort of Simulators / Jacob Andreas framework intuition from it.

Shapley Value Attribution in Chain of Thought

Which parts of Chain of Thought do LLMs ignore? For example, it's known that wrong few-shot examples do not really hurt performance. The CoT reasoning can be deceiving; knowing which parts of it are actually used for the answer is hard.

In the example below, changing 10 in the original to 11 in the intervened CoT, and resampling the entire reasoning after that mistake, does not change the final answer.

Shapley values (over the tokens in the output) might help attribute which tokens contribute to the answer. Downside: very computationally expensive for large contexts.

This is not a fully fledged research project, just a blog post from Leo Gao@OpenAI safety team, but I’m signal boosting it nevertheless. As a safety researcher, it’s so cool that models might not even follow their own CoT reasoning, and it sounds like a thing we definitely need to understand before deceptive LLMs arise.

There is also the sentence “We further note that sequences seen by the model in late stages of training are more likely to be memorized (Figure 3)”, but that figure does not show this. I’m not sure what they wanted to say there; maybe memorization is indeed more prevalent late in training, but I don’t see arguments for that in the main body of the paper.