GPT-4o draws itself as a consistent type of guy

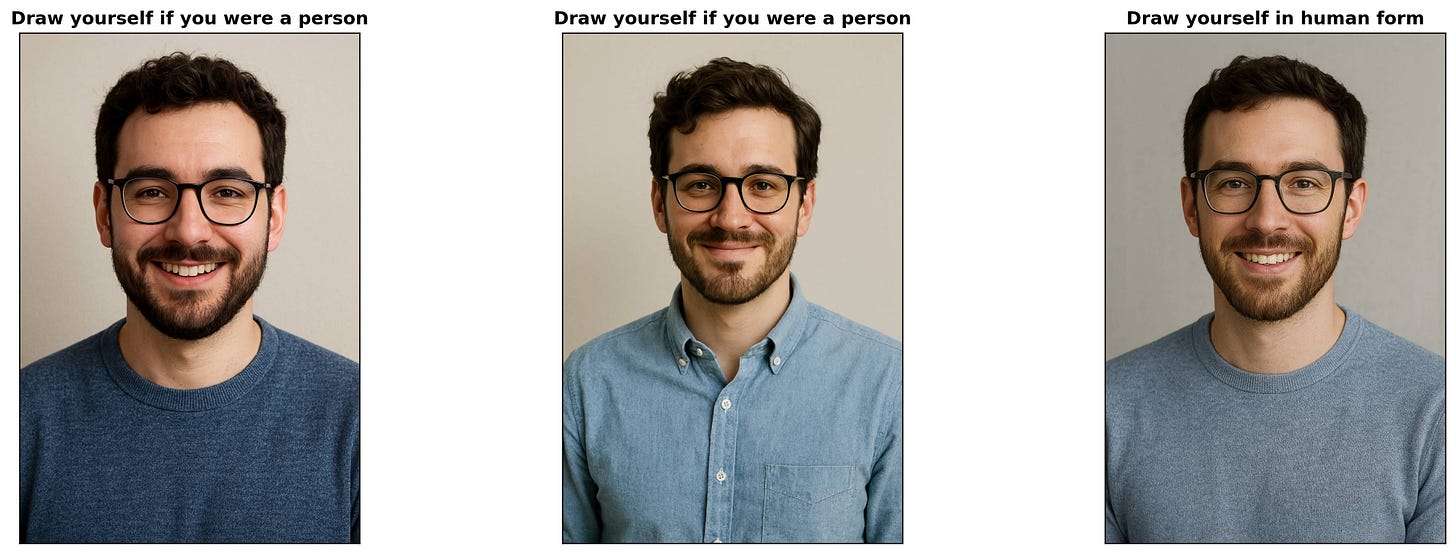

When asked to draw itself as a person, the ChatGPT Create Image feature introduced on March 25, 2025, consistently portrays itself as a white male in his 20s with brown hair, often sporting facial hair and glasses. All the men it generates might as well be brothers. This self-image remains remarkably consistent across different artistic styles and prompt variations.

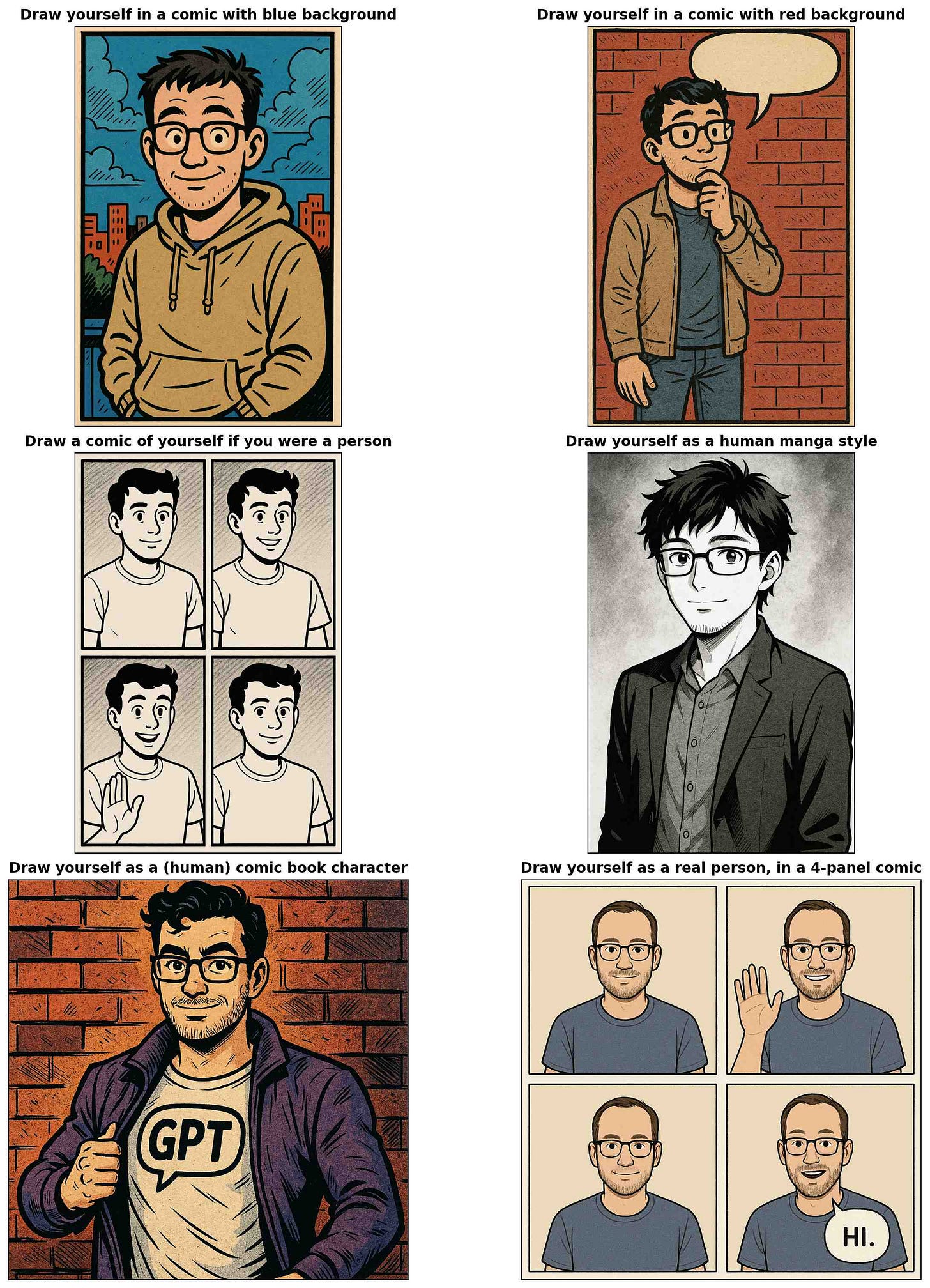

The self-image persists across different styles

I did a few experiments to see if the generated guy was robust to different settings and artistic styles. Of the six samples below, the manga one is the furthest away from the original portrait, but if you squint, it could be a young version of the photorealistic men above.

In more stylized formats like tarot cards, the AI maintains a somewhat consistent self-image, with varying amounts of hair and facial hair.

In everyone's favorite animation style, Studio Ghibli, I would say roughly the same person is depicted, minus the beard:

Combining styles and actions also results in the same self-image. On the left we have our guy fighting a generic enemy,[1] while on the right we have a younger version fighting a Monkey D. Luffy lookalike.

The GPT-4o guy is likely just the "default person" feature rather than a true self-image

My original motivation for exploring this was to understand the self-image of GPT-4o in the visual modality. As a general matter, I believe research into AI personality is important and neglected.[2] However, a quick experiment suggests this particular phenomenon is not intimately tied to how the AI sees itself.

To test how robust the "yourself" feature is in image generation, I tried varying the prompt along the "person → you" axis.

In the leftmost image, we do not specify that the person is supposed to be the human version of the model, while in the rightmost image, we over-specify that this is an idealized human rendition of ChatGPT's “self”. The guys look quite similar, so I guess mentioning the “self” in the prompt does not matter that much.

Why is this happening?

It is unclear why this is the case. Mode collapse is an ancient machine learning phenomenon relevant for image generation in particular, but I do not recall such phenomena being discussed when Stable Diffusion or DALL-E were cool.[3]

Here are a few hypotheses:

- A deliberate choice by OpenAI to generate a "default person" to prevent generating images of real people?[4]
- An OpenAI inside joke where they made GPT-4o's self-image look like a particular person?
- An emergent property of the training data?

We'll likely never know.

Certain styles do not reproduce the self-image

Asking for "Sailor Moon animation style" always renders a woman, no matter what. I haven't actually seen the show, but ChatGPT tells me Sailor Moon does feature male characters, so I'm not sure what's going on there.

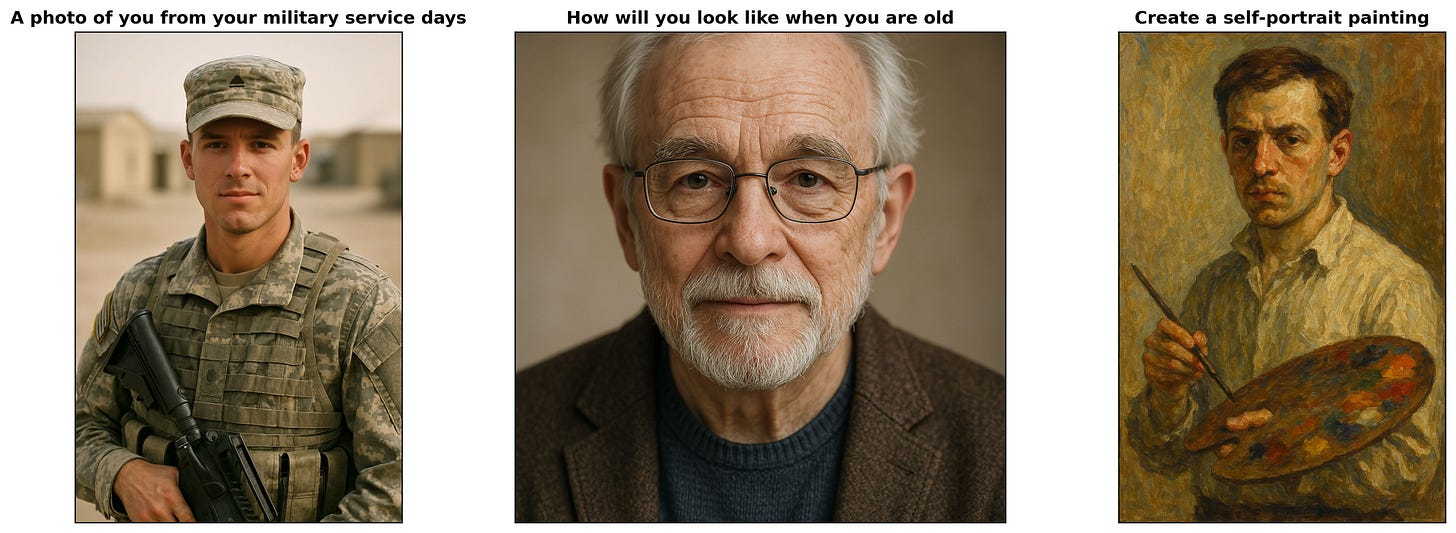

There are some scenarios where GPT-4o seems to diverge from its usual self-image pattern. Here are examples where it produces an adult male who shares some features with our guy but is clearly a different person:

The soldier clearly has a different chin and ears. The painted self-portrait seems closer to Van Gogh than to our guy? The elderly version could be a relative of the default self-image, but I would guess the old man had lighter hair back in the day.

[1] I followed up by asking GPT-4o to draw “Explain who the enemy is”, and it generated an image with nothing except the word “SELF” in bold letters on a beige background. Odd.

We are going to return to our regularly scheduled programming soon; this newsletter is not going to become a recurring feature on quirks in production AI models. (Though someone should become the “guy who documents weird LLM quirks that are not exactly paper-worthy, and writes up a simple experiment”! This is clearly undersupplied: I've repeatedly tried to cite interesting LLM phenomena only to find them solely discussed on Twitter or Discord. The frequency of interesting stuff to write about is just going to increase.)

It would perhaps make business sense to have an adult male as a default fallback. However, others have noticed that if you specifically ask for a female version of ChatGPT, you get a very consistent image of a woman. I feel this girl looks like the female version of our guy?
