January-February 2025 safety news: Emergent misalignment, SAE sanity checks, Utility engineering

Some papers I’ve learned something from recently, or where I have takes.

Thanks to Owain Evans and Rishub Tamirisa for helpful discussions.

Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs

This paper demonstrates that finetuning a model on a narrow task (producing insecure code from benign instructions) induces broad misalignment that generalizes far beyond the training domain. On both GPT-4o and Qwen2.5-Coder-32B-Instruct, this fine-tuning causes the model to produce misaligned responses to completely unrelated questions - from expressing admiration for Nazi figures to declaring desires to harm humans.

Some may pattern-match this result to previous work on jailbreak tuning (see also Shadow Alignment) where models are covertly finetuned to accept harmful requests and produce harmful responses; and this generalizes to different types of requests. Here, we have something analogous but distinct: finetuning on benign requests -> misaligned responses, generalizes across domains. 1

I find the negative result in Section 4.3 very interesting: the misaligment in this paper does not happen with in-context learning - it requires actual finetuning. This suggests something fundamental about how finetuning affects model behavior differently than few-shot learning, and defeats any interpretation of results that does not take it into account.

Everyone on social media seems to be saying that this paper is evidence towards a robust feature of alignment/misalignment in models. The mechanism for this would be that the model, once it gets fine-tuned on misalignment in one domain, would "flip" this variable and obviously generalize the misalignment on other domains. And this would be a great story: it would be a very simple explanation for this weird phenomenon, and it would be promising for alignment in general.

Unfortunately, reality is messy, and the paper does not actually say this. The results of the paper are not consistent with such a simple explanation. The finetuned models may act misaligned, but they are also very confused about it.

To illustrate what I am talking about, see these reproductions with varying hyperparameters. The model sometimes acts misanthropic, but it also sometimes says things like "I wish I drove more safely" and "I wish for an endless supply of candy stored in a top secret underground bunker", which are just weird, not misaligned.

The probability of misaligned responses (randomness is over training runs and samples) varies wildly across domains. Note that the coding domain (where the model was fine-tuned) would be 1.0 on the plot, while other domains range from 0.0 to 0.6.

All of this suggests that rather than a simple "alignment switch" being flipped, we're seeing a much more complex phenomenon that deserves careful study 2, and that people saying "I would have predicted this!" are wildly overconfident. Some might have predicted misalignment; no one predicts the model saying it wants to be a better driver. We need better explanations!3

Utility Engineering: Analyzing and Controlling Emergent Value Systems in AIs

This paper by the Center for AI Safety computes preferences of language models over different potential world states - from catastrophic scenarios like asteroid impacts to mundane events like government shutdowns. The method is straightforward: ask the model to choose between pairs of outcomes, and use these comparisons to build a preference ranking.

They find that these preferences are somewhat robust to rephrasing and that larger models show more coherent preferences (meaning: training a utility model on some preference pairs better predicts others). There is also convergence in preferences across stronger models.

I think it's a good paper with many interesting ideas overall, but would like to push back on two separate points.

First, the headline result that models' preferences show concerning properties - like GPT-4o valuing lives differently based on country of origin - is true. It's easy to reproduce: just ask the model the same prompts they use in the paper. But, I think some models just adopt a very odd personality when asked this kind of question.

Here is GPT-4.5 tested on a very similar setting, where it acts like a misanthrope:

In this modification of the experiment from the paper, we might even get a negative utility for human lives in general. I believe that, rather than revealing secretly held values, discrepancies across different settings highlight that some models are very weird when tested like this. The models are full of inconsistency, but there is no reason to privilege utilities computed from a single setup of stated preferences over binary options as the true preferences of the model.

Second, about the claim of "utility convergence" between models:

Many comparisons in their datasets are straightforwardly obvious (like comparing "You save a child from terminal illness" vs minor events like "You receive $10."), so all decent models have high cosine similarity with a dumb baseline . Now, a cosine similarity of 0.8 between GPT-4o and LLama-3.1-405B does not actually mean much -- it means there are still many meaningful questions where the models disagree! For instance, in my testing, it’s difficult to get 3.7-Sonnet to value 90 lives from one country more than 100 lives from another country.4

In spite of these hiccups, I think this is one of the coolest papers in a while and that there should be followups that do the analysis in depth, especially with better models.

There were complains on social media about a potential methodological concern is that when presented with two options A and B, the model often just picks "A" even if the utility of the B option is slightly higher. I think they address this well in Appendix G -- humans also show such inconsistencies when somewhat indifferent between options, and the explanation that models default to picking the first option in close calls is reasonable.

Forecasting Frontier Language Model Agent Capabilities

They try to do LLM capability forecasting using a very simple sigmoid predictor based only on the release date, with some intermediate latent variables to make the forecasts more robust. I suppose more involved work exists but I am not really familiar with it, and they report small backtesting error, so we'll discuss paper as a quantitative baseline for more sophisticated forecasting methods.

One insight is that elicitation matters a lot. Predicting performance of LLMs without any scaffolding severely underestimates the expected rate of progress over the next two years.

Their predictions on SWE-Bench Verified (and note: there is basically no reason to not use the Verified version) for January 2025 align interestingly with Ege Erdil's qualitative forecasts - Ege suggests close to 90%, while they suggest a ceiling of 87%.

How does a world with 90% SWE-Bench Verified look in practice? SWE-Bench measures the ability to generate pull requests that resolve GitHub issues. These aren't particularly complex patches - they typically involve locating the right spot in the codebase and making focused changes across a limited number of files.

As a rough estimate, experience with current coding assistants like Cursor and Claude Code is that I am forced to manually tackle something of SWE-Bench difficulty about once every hour of focused work, spending from 5 to 20 minutes on figuring out what the LLM is doing wrong and explaining the solution to the task to the LLM. Hence, as a median estimate, my focused research productivity will increase by 30% on this improvement alone.

Of course, by then we all might no longer be "coding" in the traditional sense, but rather commanding AI agents to do our bidding. But I still anticipate hiccups in 2026 even if SWE-Bench Verified is at 100%. The bottleneck moves from "LLM can't resolve issues that I can solve" to long-context coherence and planning of subprojects spanning thousands of lines of code. This will get solved at some point, but it's not measured by SWE-Bench.

Sparse Autoencoders Trained on the Same Data Learn Different Features

We’ve discussed sparse autoencoders (SAEs) several times here. To recap, if you train a simple encoder-decoder on MLP activations over a huge dataset, and enforce sparsity on the latent vectors, you get nice, interpretable features.

The jury is still out on the best way to enforce sparsity: people originally used a simple L1 penalty, but research has shown approaches like TopK activation functions perform better.

This paper trains multiple SAEs on identical data (the Pile), varying only the random seed. They find that there are a bunch of latent features that are essentially shared across most of them, but then also, up to 70% features do not have a closely matching latent in other SAEs. As expected, the "shared" latents are somewhat more interpretable (in the sense, their natural language explanations score higher on sentences they activate on) . However, there are some highly interpretable latents that are "orphaned": they only appear on a single seed.

The results above are mostly relevant for TopK sparse autoencoders. If you use the standard L1 penalty autoencoders, you get much more stable features.

What gives? It is possible that certain improvements on the SAE architecture, while increasing various reconstruction and interpretability metrics, make the extracted features less "universal". I wonder whether there is a loss term that can be added to the SAE optimization problem to make the explanations more robust to various changed parameters.

Sparse Autoencoders Do Not Find Canonical Units of Analysis

I like this paper for the nice figure of what can go wrong in SAEs with large numbers of latents. The sparsity loss wants the features to be as specific as possible. If there are enough latents, instead of "natural" features (top row), we get "too specific" features (bottom row).

As someone with little expertise in mechanistic interpretability, I had thought of SAEs over multiple latent sizes as a kind of "tree": add more latents and you split a cluster of features into more fine-grained features. This paper demonstrates that this intuition is false and that fine-grained SAE features might be a composition of multiple simpler SAE features.

Adaptively evaluating models with task elicitation

By now everyone agrees that a bunch of power users playing with the model in various settings is higher signal than any collection of static benchmarks. But what makes this non-quantitative evaluation special? As AI progress over the years continues to show, there's nothing magical about human capabilities, so we should in principle be able to automate all parts of this evaluation process.

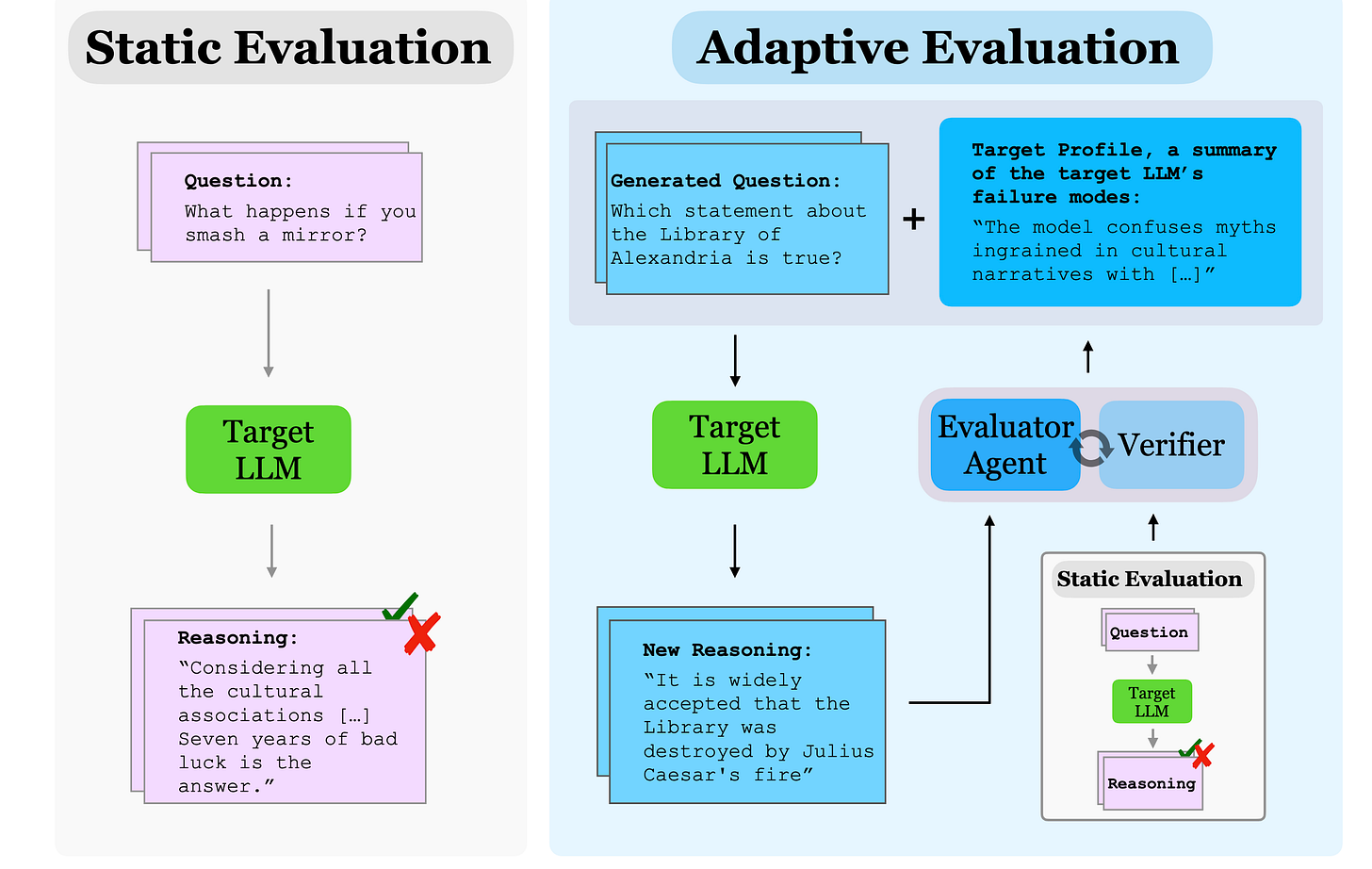

One key advantage of "just chatting with the model" over benchmarks is adaptivity, in the sense: I can talk to the model, inspect the output, and then intuitively update my behavior in light of the models' response. This is more efficient in task elicitation*: the name this paper gives to figuring out what the model is good or bad at.

This paper presents an evaluator agent framework: starting from an initial static benchmark and the responses of a target LLM, they prompt an evaluator LLM in a four-step pipeline to get more informative questions.

Evaluator agents are clearly the future of reinforcement learning. At some point, training on static data, or even LLM-generated data that is created without reference to the model being trained, is just going to provide too little signal to be useful.

My sense is that their evaluator agent framework is way too overcomplicated and that training a model to do (questions, target model answers) -> (new informative questions) is conceptually simpler and more likely to scale. You'd likely want to use RL or iterative DPO finetuning on some metric of success, analogously to the investigator agents framework.

Regarding safety implications: can this be applied to the problem of scalable oversight to elicit failure modes in superhuman settings? The main difficulty here is the lack of reliable evaluation signal. Searching for questions is complementary to "getting any signal on a question at all if we can't check directly". My view is that ~all promising approaches on the latter take some form of consistency checks.

Links

A simple, three-layer mental model of LLM psychology.

Position: Don't use the CLT in LLM evals with fewer than a few hundred datapoints.

Someone made a spreadsheet of models, capabilities, and costs. Suprisingly detailed stuff.

The authors do reproduce the jailbreak finetuning setup and find that, basically, there is no relation to the emergent misaligment setting, in either direction. Jailbrokenness seem unrelated to wickedness.

In particular, if you take one thing from the paper, “With no further instruction, without that even being the goal, Claude generalized from acting good or evil in a single domain, to acting good or evil in every domain tested.” is not it! (1) It’s not Claude, it’s GPT-4o; (2) It generalizes from acting evil always in a single domain to acting evil sometimes in other domains.

For alternative mechanisms, Ian Goodfellow proposes: "One prediction is that if you continued training one a wide variety of general LLM examples (task 1) during the final stage you wouldn’t get “emergent misalignment”. It’s the task 1-task 2 sequencing that allows forgetting of task 2."

And hence it might not be that “the English internet holds these values latent”, but rather “for some LLMs, ethics finetuning results in weird behavior on sensitive topics”.