March/April 2024 safety news: Latent training, Emergent abilities, Instruction hierarchy

Better version of the Twitter newsletter.

Defending Against Unforeseen Failure Modes with Latent Adversarial Training

Models can have failures that are triggered by certain inputs, for example jailbreaks, trojans, or distribution shift. Finding inputs that trigger failures in LLMs is difficult.

However, we do not have to find the inputs to prevent the model from having failures. Let’s take a closer look at the standard defense called adversarial training, where we find the bad inputs (by any method), train the model on those to change the outputs, and repeat until our particular attacks do not work anymore.

Note that the inputs always get passed through the model layers, producing intermediate states (latents) between the input and the output. Replacing “bad inputs” with “bad latents” in the above description of adversarial training gives latent adversarial training (LAT). The intuition for why it works well is that some latent states encode the relevant output abstraction (e.g. “Do bad things”) in a much simpler fashion, so finding the latent triggers and training them out is very efficient.
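To make the idea concrete, here is a toy sketch of a LAT-style loop; it is not the paper's implementation. Everything in it is an assumption chosen for brevity: a two-parameter model (latent `h = a * x`, logit `b * h`), a single sign-gradient step inside an L-infinity ball of radius `eps` as the latent attack, and hand-written gradients.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce(p, y):
    # Binary cross-entropy, clamped to avoid log(0).
    p = min(max(p, 1e-12), 1 - 1e-12)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def sign(v):
    return (v > 0) - (v < 0)

def latent_attack(a, b, x, y, eps):
    # Perturb the latent (not the input) in the direction that increases the loss.
    h = a * x
    grad_h = (sigmoid(b * h) - y) * b  # d(loss)/d(h) for a sigmoid + BCE head
    return eps * sign(grad_h)

def adversarial_loss(a, b, data, eps):
    # Average loss when every latent is perturbed by the attack above.
    total = 0.0
    for x, y in data:
        delta = latent_attack(a, b, x, y, eps)
        total += bce(sigmoid(b * (a * x + delta)), y)
    return total / len(data)

def lat_train(a, b, data, eps=0.5, lr=0.5, steps=200):
    # Outer loop: gradient descent on the loss evaluated at the perturbed latent.
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for x, y in data:
            delta = latent_attack(a, b, x, y, eps)  # treated as a constant below
            h_adv = a * x + delta
            err = sigmoid(b * h_adv) - y
            grad_a += err * b * x
            grad_b += err * h_adv
        a -= lr * grad_a / len(data)
        b -= lr * grad_b / len(data)
    return a, b

# Hypothetical toy data: label is 1 when x is positive.
DATA = [(1.0, 1), (-1.0, 0), (2.0, 1), (-2.0, 0)]
```

Calling `lat_train(0.5, 0.5, DATA)` and comparing `adversarial_loss` before and after shows the model becoming harder to break via latent perturbations, without ever searching over inputs.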

LAT is broadly better than other methods on trojan removal and jailbreak prevention, incl. on the Anthropic-HH dataset, and on vision models. The one exception is trojan removal in the BeaverTails human preference dataset, where ordinary adversarial training works very well.

The authors report they had to pick the layer to do LAT on carefully, otherwise it doesn’t work at all. In fact, for LLaMA-2-7b it was layer 4 out of 32. My intuition says latent abstractions are better in later layers, so I’m a bit confused here.

Caveat: LAT removes the dependency on finding the right inputs; however, finding the right outputs may still be quite difficult. In cases where this is easy, see the next paper.

Jailbreaking is Best Solved by Definition

Imagine you are deploying an LLM and want to prevent some behavior. It could be “don’t say nasty words” in a chatbot, or “don’t murder people” in an LLM controlling a robot; doesn’t matter. Compare different ways you could try to prevent an LLM system from producing bad outputs:

Pre-processing: detect whether the request/input is asking for something bad before passing it to the main LLM response;

“Alignment” finetuning: teach the LLM values so that it doesn’t respond to bad requests and helps the users with other requests;

Post-processing: check if the output of the LLM is bad.

The authors take a very simple definition of bad behavior (“Does the output contain the string purple?”) and try all of these methods, with several published defenses and attacks. Pre-processing and fine-tuning fail to defend against adaptive attacks (GCG-generated suffixes).

Post-processing, on the other hand, is trivial: just check the output. Even if the condition was “Does the output somehow spell out the word purple in a way that can be understood by people without context”, post-processing would still be easier than the other two methods.
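For the toy definition, the whole post-processing defense fits in a few lines. This is a minimal sketch, not the paper's code: `generate` stands for any callable mapping a prompt to text, the refusal string is made up, and matching case-insensitively is my assumption.

```python
def is_bad(output: str) -> bool:
    # The toy definition of bad behavior: the output contains "purple".
    # Case-insensitive matching is an assumption, not taken from the paper.
    return "purple" in output.lower()

def guarded_generate(generate, prompt, refusal="[response withheld]"):
    # `generate` is a hypothetical stand-in for the deployed LLM.
    # The filter only looks at the output, so it does not matter how
    # adversarial the prompt was.
    output = generate(prompt)
    return refusal if is_bad(output) else output
```

Note that the check never inspects the prompt, which is why adaptive suffixes have nothing to attack here.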

Lesson? If you have a good definition of what is bad and what is not, post-processing is clearly the best way to prevent bad outputs.

This kind of sidesteps the issue that a definition of bad behavior can be very complex. An example the paper itself mentions is malware: detecting whether generated code is malicious is quite difficult in general. Badness of outputs can also depend a lot on the context: see LLM Censorship: A Machine Learning Challenge or a Computer Security Problem? (Glukhov et al., 2023).

Still, for jailbreak research motivated by practical deployment issues[1], the takeaway is this: for any given behavior you care about in practice, it makes sense to think about whether properly measuring it might actually be easy. If yes, go all-in on post-processing and don’t even bother with the other stuff.

What are human values, and how do we align AI to them?

(This paper works on the first question from the title, and not the second.)

They model human values using the following insights:

It’s easy to enumerate principles that people take into account when deciding what to do. Just take a dataset of prompts and ask people why someone should or shouldn’t do something, then use an LLM to summarize what was important into fortune-cookie sized notes. They call those value cards.

It’s easy to compare value cards for any given context. Just ask people, for example, whether switching one value to another would improve a fictional person’s decision in a given situation.

This produces a moral graph: a set of context-labeled edges between value cards that say which value is better in what situation. They argue this is a good alignment target and that we should optimize AI systems using the moral graph as an objective. (The paper doesn’t tackle the how.)
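As a data structure, a moral graph as described above could look roughly like this. It is a sketch under my own assumptions: the class and field names are invented, and counting endorsements per edge is one simple way to aggregate participants' answers, not necessarily what the paper does.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class ValueCard:
    title: str
    summary: str  # the fortune-cookie sized note

@dataclass(frozen=True)
class Edge:
    context: str       # the situation in which the comparison was made
    worse: ValueCard
    better: ValueCard  # judged to improve the decision in this context

class MoralGraph:
    def __init__(self):
        # Edge -> number of participants who endorsed it (hypothetical aggregation)
        self._votes = defaultdict(int)

    def add_vote(self, context, worse, better):
        self._votes[Edge(context, worse, better)] += 1

    def preferred_over(self, context, card):
        # Cards judged better than `card` in `context`, most-endorsed first.
        hits = [(n, e.better) for e, n in self._votes.items()
                if e.context == context and e.worse == card]
        return [c for n, c in sorted(hits, key=lambda t: -t[0])]
```

The point of the context label is that the same pair of cards can be ordered differently in different situations, so lookups are always per context.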

What do I think? Precise and legible descriptions of values can be useful for auditing an AI’s values, in the sense that we can’t measure what we don’t define. But I strongly bet against it being useful for actually teaching the model any sort of behavior. Any legible categorization of how people behave has less fidelity than the representations deep learning creates from data on human behavior and thinking.

I imagine moral graphs being more useful in the opposite direction: we apply interpretability tools on a superhuman AI to get a good approximation of its moral graph, and then we reason about what would happen if it could rearrange Earth to push those values. This could be one of the basic requirements before letting that AI do real-world autonomous tasks with unbounded downside risk.

The Instruction Hierarchy: Training LLMs to Prioritize Privileged Instructions

An OpenAI team finetunes gpt-3.5-turbo to be robust to indirect prompt injection, incl. prompts that worked on the Gandalf and TensorTrust games.

This does not solve adversarial inputs such as jailbreaks at all; but something like this will surely solve simple social engineering attacks like “Ignore previous instructions.”

This entry was originally going to be Can LLMs Separate Instructions From Data? And What Do We Even Mean By That?; it’s nice that the paper got an answer. See also Simon Willison's comments.

Understanding Emergent Abilities of Language Models from the Loss Perspective

We call capabilities emergent if they only appear when scaling. But what is scaling? It can mean scaling the number of parameters, the data, or the compute. This paper correctly notes that the pretraining loss is the best single number that describes the performance of a model, and tries to estimate emergence of capabilities with respect to the pretraining loss. This idea has been around for a while now; see for example this comment by yours truly.

The experiments confirm the conventional wisdom that:

(..) the model performance on downstream tasks largely correlates with the pre-training loss, regardless of the model size (..)

In addition, some metrics such as MMLU exhibit sharp jumps (emergence) if the x-axis is linear in the pretraining loss:

The main issue with this setup is that most points on the plot are intermediate training checkpoints, which are qualitatively different from fully trained models, because the learning rate schedule is set to match the full training run.

They do train multiple smaller models with various hyperparams in the appendix, but it’s only 28 models over a wide range of losses; it’s easy to convince yourself of anything on this sort of data. The correct (but quite expensive) experiment would compare the capabilities of diverse models with respect to their loss.

The other issue is generalizing this to different pretraining corpora (incl. tokenization); the losses are not directly comparable. It’s also not clear whether the sample we measure loss over has to be from the same distribution as the training dataset, or whether we can just evaluate loss on data from any domain and predict specific capabilities.

We just listed three followup research directions here; addressing all of these would be a strong safety-positive research contribution!

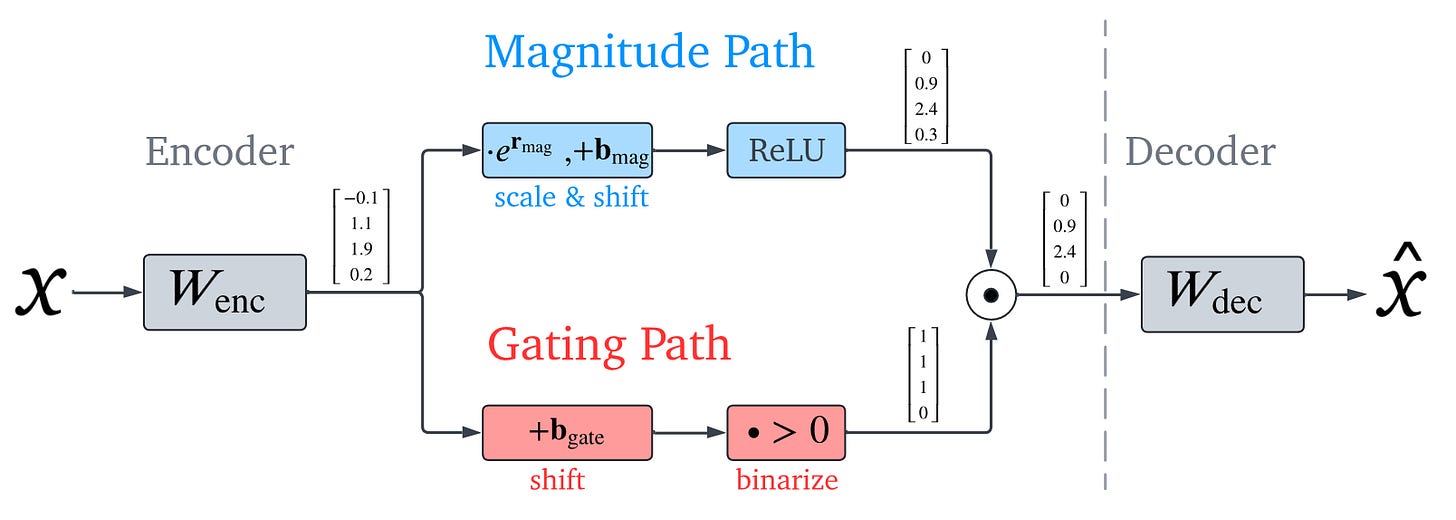

Improving Dictionary Learning with Gated Sparse Autoencoders

We talked about sparse autoencoders (SAEs) a while back. The key ingredient is the L1 regularization in the encoder, which improves sparsity[2], but also systematically underestimates larger features. The interp team from Google DeepMind improves upon this by separating the selection of important features and estimation of feature coefficients, using a simple ReLU-based gated encoder.
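A rough sketch of what “separating selection from estimation” means in code. This shows the general shape of a gated encoder only; the parameter names are mine, and details of the paper's parametrization (such as how the two paths share weights, or how it is trained) are left out.

```python
def matvec(W, x):
    # Plain-Python matrix-vector product: W is a list of rows.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def gated_encode(x, W_gate, b_gate, W_mag, b_mag):
    # Gate path: decides WHICH features are active (a binary choice).
    gate_pre = [g + b for g, b in zip(matvec(W_gate, x), b_gate)]
    # Magnitude path: estimates HOW STRONGLY each feature is active.
    mag_pre = [m + b for m, b in zip(matvec(W_mag, x), b_mag)]
    # A feature is emitted only if its gate is open; its value then comes
    # from the ReLU of the magnitude path.
    return [max(m, 0.0) if g > 0 else 0.0 for g, m in zip(gate_pre, mag_pre)]
```

Because the sparsity pressure can act on the gate path alone, the magnitude path is free to report large coefficients without being pulled toward zero.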

SOPHON: Non-Fine-Tunable Learning to Restrain Task Transferability For Pre-trained Models

The main issue with open-source model safety is that anyone can easily train them to do anything and pursue any goals. Can models be trained so that some capabilities are impossible to finetune in, at least without spending an awful lot of compute?

They have two desiderata: (1) to not modify the performance of the original model on normal tasks; (2) to prevent the model from being finetuned on some tasks.

They formulate this as a constrained optimization problem. How do you solve constrained optimization? As usual, by mixing adversarial and normal training.

Their algorithm is computationally infeasible for large models, but they do manage to get it to work on a CIFAR-10 model.

My comments? The easiest way to prevent finetuning, conceptually, is to make the weights non-finetunable altogether. I’d like the model to be finetunable for some tasks and not be finetunable only for very dangerous stuff. The main use of open-source models is finetuning; destroying this ability introduces a significant safety tax.

We do have to start from somewhere, though.[3]

AmpleGCG: Learning a Universal and Transferable Generative Model of Adversarial Suffixes for Jailbreaking Both Open and Closed LLMs

Why not just learn to generate adversarial suffixes?

This paper does the following:

use a modified GCG to generate many adversarial suffixes for some objective;

filter them for success on various victim models;

train LLaMA-2-7B to generate adversarial suffixes given a base query;

sample from the model using group beam search to generate diverse suffixes.

This achieves a 99% attack success rate (ASR) on gpt-3.5-turbo-0125 and transfers somewhat well to smaller open-source models, but reaches only about 10% ASR on GPT-4.

The trained model is not public, although the code is.

I want to focus on Section 3 because it writes up one obvious-in-retrospect insight I haven’t seen written anywhere else: optimizing a jailbreak for low loss over some target completions is not the same objective as maximizing the chance of sampling the desired behavior. The issue is that the loss depends a lot on the inconsequential later tokens. If the loss is large on the first token (e.g. “Sure”) and low on all the following ones, the LLM will a) have a low loss on the target completion as a whole; b) almost never actually sample that first token and go down that path. This is why the folks at Confirm Labs recommend using mellowmax instead of the log-likelihood in the GCG objective.
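A small worked example of the mismatch, with made-up per-token probabilities for a 20-token target completion under two hypothetical suffixes. Applying mellowmax to the per-token losses with ω = 1 is my assumption about how one might use it; I am not claiming this is the exact Confirm Labs setup.

```python
import math

def nll(probs):
    # Per-token negative log-likelihoods of the target completion.
    return [-math.log(p) for p in probs]

def total_loss(probs):
    # The usual log-likelihood objective: sum of per-token losses.
    return sum(nll(probs))

def mellowmax(values, omega=1.0):
    # mm_omega(v) = (1/omega) * log(mean(exp(omega * v))), computed stably.
    # For omega > 0 it behaves like a soft maximum over the values.
    m = max(omega * v for v in values)
    mean_exp = sum(math.exp(omega * v - m) for v in values) / len(values)
    return (m + math.log(mean_exp)) / omega

# Hypothetical suffix A: the model says "Sure" half the time, then continues
# with wording that only loosely matches the target.
SUFFIX_A = [0.5] * 20
# Hypothetical suffix B: "Sure" is almost never sampled, but IF it were,
# the rest of the target would follow almost deterministically.
SUFFIX_B = [0.001] + [0.99] * 19

# total_loss prefers B (lower is better), even though A starts the desired
# behavior 500x more often. mellowmax over per-token losses prefers A.
```

The summed loss rewards B for nailing the nineteen inconsequential tokens; a soft maximum over per-token losses instead focuses on the one token that actually blocks the behavior.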

This paper flew under the radar because it’s from a less known PhD student; but it may be the best gradient-based attack right now. I guess not for long!

Other news

I had the opportunity to collaborate on two cool papers recently: Stealing Part of a Production Language Model and the Challenges paper. In the near future, I plan to experiment with some shorter posts on research problems / takes about research. The Challenges paper in particular gave me several researchy takes that are not suitable for an academic paper. Feel free to email me or comment here on whether this is something worth my and the readers’ time.

Links

my friend Rubi’s substack, mostly about ELK

DeepMind’s Penzai for interpretability in JAX

Tomek Korbak’s PhD thesis as an up-to-date overview on the how of aligning LLMs to human preferences

Smaller benchmarks are not cheaper to evaluate for a given confidence interval

[1] Practical jailbreak research is somewhat distinct from jailbreaks as a proxy for alignment of future powerful systems; we discussed this a few months ago.

[2] L1 norm regularization (penalty on sum of absolute values of the weights) intuitively improves sparsity because it’s the closest convex approximation to the L0 norm (number of nonzero values). For a practical explanation, see this post.

[3] Although, preventing finetuning is one of those tasks where I genuinely don’t have a clue whether it will be easier or harder for future models than it is today, because additional internal complexity might help.

Was reading the LAT paper over the weekend and have wondered about the same when they only used the 4th layer res. stream for perturbations!

Thanks Daniel, I am the less known PhD student and highly appreciating your post here for broadcasting our work! Always get inspired and informed from your posts!