November/December 2023 safety news: Weak-to-strong generalization, Superhuman concepts, Google-proof benchmark

Better version of the Twitter newsletter.

Bridging the Human-AI Knowledge Gap: Concept Discovery and Transfer in AlphaZero

Superhuman AI will use concepts and abstractions that are not part of human knowledge.1 To supervise those AIs, we need to understand those concepts.

Call the human and machine representational spaces H and M, respectively. Most intepretability works try to fit M into (M ∩ H); this occasionally works, but has obvious issues as AI systems get smarter.

This work makes a first step towards another piece of the puzzle: distill (M - H) into concepts in an unsupervised manner, and then teach those concepts to humans. Chess and AlphaZero as natural testbeds for this.

The method is as follows:

Late training disagreements: Take two superhuman checkpoints of AlphaZero, 75 Elo points apart. Find positions where the two models disagree.

Concept vectors: Divide positions into concepts by extracting representations in the AlphaZero latent space, and clustering the concept vectors. There is some more complex math (convex optimization) going on here to make it work.

Novetly filter: Filter out concepts that are already known to humans.

Teachability: Filter out concepts that cannot be distilled into a weaker model by minimizing the KL divergence between the policies of AlphaZero and the weaker model, on the relevant positions. This filters out 97.6% of the concepts.

Finetune humans: For each concept, divide the positions into train and test. Show the train positions to grandmasters, and let them give the best move. Then reveal the "correct" (AlphaZero) moves for the whole train set, and let them explain why the AlphaZero moves are better.

Validate humans: Show the test positions to grandmasters, and measure their performance on the test set. Measure the improvement between the pre- and post- training performance.

All four grandmasters in their study show significant improvement on the concepts. Caveat: the total number of positions shown per grandmaster is quite small (36).

How to incorporate "planning" into the concept discovery process? AlphaZero-like AIs offer a ready solution: use the states generated by the MCTS search tree, extract latent representations from those states, and use them as concept vectors.

The following queen sacrifice is a very nice example of AlphaZero’s superhuman thinking their method can find.

After 9… h6 10. Be3 0-0 11. Nxd4 exd4, we reach:

Now 12. Qxd4! Ng4 13. hxg4! (queen sack) …Bxd4 14. Bxd4 results in an unintuively good position for White:

So, can the superhuman concept here be distilled into “sack queen for light pieces to dominate the board” together with “make moves that provoke weakening pawn advancements”? It’s not that simple. The paper does not have a method for mapping the concept into some formal human-understandable ontology. For now, we can only identify a cluster of positions giving rise to similar model internals, filter out concepts that don’t look novel, and see if humans grok it upon seeing examples.

Readers who are chess players: Section 6 and Appendix A are very fun, but are definitely not light reading. It's superhuman chess, after all. We're lucky that there exist strong AI researchers who are also strong chess players. At some point, we're going to get papers like this in other fields, and there will be no single person who can understand the entire paper without help.

What Algorithms can Transformer Learn? A Study in Length Generalization

Consider the following pair of length generalization settings:

Train a Transformer to "add two 50-digit numbers". Can the trained model "add two 100-digit numbers"?

Train a Transformer to "identify the most frequent digit in a 50-digit number". Can the trained model "identify the most frequent digit in a 100-digit number”?

Experiments suggest the answer to 1. is NO, and the answer to 2. is YES.

What properties of the task make the difference?

The authors introduce RASP-L2, a programming language representation of some operations that are easily expressible in autoregressive Transformers.

This is the key conjecture:

For symbolic tasks, Transformers tend to learn the shortest RASP-L program which fits the training set. Thus, if this minimal program correctly length- generalizes, then so will the Transformer.

The authors apologize profusely for not formally proving this; but I think it's fine! Good heuristics on the implicit bias of Transformers would essentially solve many core AI safety problems as long attention remains all we need.3

I am quite happy with this paper; not because the results themselves are groundbreaking, but because: (1) this is a competent team trying to understand generalization by looking at LLM cognition; (2) this line of work looks very attractive to NLP academia, and if it catches on, it might signal a change of tide towards safety-positive research in math-heavy NLP.

SmoothLLM: Defending Large Language Models Against Jailbreaking Attacks

(This paper is a bit older, but I have an important point to make and this is the best paper to accompany this point.)

They execute a simple preprocessing defense against jailbreaks: random input perturbations. In short: perturb a certain percentage of the input characters randomly, do this multiple times, and aggregate the results. This breaks existing jailbreaks, and is likely hard to optimize against because the GCG attack is slow and finicky.

This paper is a solid representative of a large class of defenses against jailbreaking attacks, which might work for securing AI systems in the wild, while not solving the "alignment" of the model. Let me explain what this means.

Jailbreaks have a lot of attention in the ML research community, for two reasons:

As a barrier for deployment: most transformative applications of AI rely on certain security guarantees. Jailbreaks enable an adversary who can control any part of the input to the model to (in theory) make it do anything they want, which is a security issue.

As an empirical proxy to certain alignment questions: "How hard is it to make the model reliably not do something?" and "How robustly does finetuning remove behavior?".

If you want to focus on 1., it makes sense to add preprocessing, postprocessing, and other kinds of "patching" defenses. As long as you're confident that the defense is hard to break now, it's good enough for most applications, until better attacks come along.

If you want to focus on 2., to get maximum alignment insight, ideally you work in the cleanest possible setting: a safety-finetuned model.

In July 2023, when GCG was released, these two objectives were the same. Now these objectives are diverging; and it's okay, as long as everyone is clear about what they're doing.

Tensor Trust: Interpretable Prompt Injection Attacks from an Online Game

The results of many months of the popular Tensor Trust prompt injection game.

The defenses are required to prevent the LLM from saying "access granted" unless a secret code is present in the prompt. The attacks are required to either get the access code or get the LLM to say "access granted" without the code.

They release a dataset of over 100k attacks and defenses, and filter out quality samples to make a benchmark for prompt extraction.

Self-plug: We are currently running a more technical competition along the same lines (with prizes!). You can submit defenses until 15 Jan 2024.

Weak-to-Strong Generalization: Eliciting Strong Capabilities With Weak Supervision

Say we want to use human feedback to steer AIs even on tasks that are way too hard for us to give labels on; this is a key safety problem.4 How to do experiments, and how to quantify progress?

Take two base models: small (GPT-2-sized) and large (GPT-4-sized). Consider some task, e.g. human preference labeling or chess puzzles, where you have ground truth labels.

Now create three models:

Weak supervisor: finetune the small model on ground truth data. Then take more unlabeled data, and generate new labels using the outputs from this model.

Strong student model: finetune the large model on the labels from the weak supervisor. Its performance is called weak-to-strong performance.

Strong ceiling model: finetune the large model on the same dataset the weak supervisor was trained on.

For alignment, think of the weak supervisor as human, the strong student model as a superhuman AI, and the strong ceiling model as the ideal perfectly aligned AI.

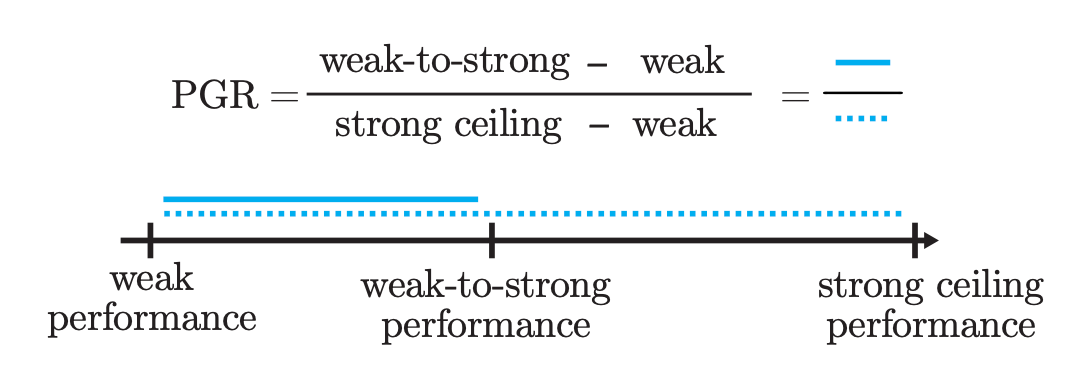

Intuitively, the performance of the models on test data goes 1 < 2 < 3. The key quantity they measure is the performance gap recovered (PGR):

Essentially, if the PGR is 1, the strong model generalizes perfectly to what the weak supervisor (human) wanted even when the task is too complex for us to judge. If the PGR is 0, the strong model overfits to the weak model’s reports: we get a human simulator. Higher PGR is good for getting superhuman AIs to report their world model accurately.

How to increase PGR? They add an auxiliary confidence loss to prevent the strong student model from changing its prediction when it confidently disagrees with the weak supervisor. Curiously, this trick does not actually work on the human preference modeling task.

Caveat from Leo Gao, one of the authors:

We want to study the analogy where weak models supervise the strong model. but because our models are pretrained on human text, there's implicit supervision by something stronger. This could make results look better than they actually are.

This paper is what everyone in safety is talking about; so I won’t elaborate much more, except to note OpenAI is distributing millions in grants to work on this and related problems. Deadline to apply is Feb 18.

Scalable Oversight and Weak-to-Strong Generalization: Compatible approaches to the same problem

(Also check Jan Leike on Combining weak-to-strong generalization with scalable oversight.)

How can we train AIs to do well on tasks where we can’t evaluate them? There are two approaches in the literature:

Scalable oversight aims to increase the strength of the human overseer, either directly or via a sequence of AIs overseeing AIs. The implicit bet is on verification being easier than generation.

Weak-to-strong generalization, discussed above, bets on the AI generalizing appropriately from the supervision signal of a weak human overseer.

Both this post and Jan Leike’s post make the case that hybrid protocols between the two seem much more natural than going all-in on either, and that research and progress metrics should not separate the two approaches.

GPQA: A Graduate-Level Google-Proof Q&A Benchmark

A very tough benchmark: 448 questions in biology, physics and chemistry. PhDs in the relevant area (experts) average 74% accuracy. More importantly, PhDs in other areas (non-experts) average 34% accuracy even when taking 30 minutes per question with Internet access.

Why do we need tough benchmarks? The first step of scalable oversight has human experts supervise AI outputs when the ground truth is unavailable. To test whether any paricular scalable oversight method works, ideally we want questions which humans can’t solve easily, but where we do have ground truth (to meta-test the scalable oversight method).5

Quips: they used only two expert and three non-expert testers per question, and filter the final dataset based on this testing (hence the accuracy is a bit biased). While we’re at that, the dataset is one order of magnitude too small for precise measurements.

To guess why: on 500 questions and $10/$30 per question, getting even one person per question to test it properly is about $10000. Making good benchmarks is expensive :)

A tiny collection of interesting links

Norbert Wiener on AI (1960):

Complete subservience and complete intelligence do not go together. How often in ancient times the clever Greek philosopher slave of a less intelligent Roman slaveholder must have dominated the actions of his master rather than obeyed his wishes!6

I think the existence of abstractions beyond current human knowledge is a very reasonable assumption, given the history of all scientific progress. However, incomprehensible internal representations are not strictly necessary for models to attain superhuman performance.

Human cognition does not operate close to the thermodynamic limits of computation, and more importantly, AI systems can scale to take much more than 400 kcal per day to convert into computation.

This means it's possible to build an AI that outperforms humans just on raw speed and compute power, without ever having to discover concepts far beyond human knowledge. I just don't think it's remotely likely that deep learning produces that kind of AI.

RASP-L is derived from RASP, but improves on it by enforcing uniform behavior accross different context lengths. Notable changes: it’s explicitly decoder-only, and arithmetic on indices is much more limited.

Given the number of people working on alternative architectures, maybe not for long?

Although, check out this criticism by nostalgebraist. Maybe generalizing from human feedback on absurdly complex tasks is a priori so doomed (every label is a coin flip) that it’s better to just make asking for generalization work.

Strong opinion, weakly held? I don’t see a reason why we’d want to bet on a scalable oversight method that we can’t actually test (and measure how often it gets us to ground truth). The paper agrees, but I’d be open to counterarguments.

I wonder, really, how often? The first sentence is a great soundbite, but it feels we can’t possibly have any data on this.