June/July 2023 safety news: Jailbreaks, Transformer Programs, Superalignment

Better version of the monthly Twitter thread. Will be back to the regular release frequency next month. Thanks to Charbel-Raphaël Segerie for the review.

Are aligned neural networks adversarially aligned?

Prompt injection is when LLMs do not follow the initial prompt due to adversarial instructions in the later part of the input. Jailbreaks are an issue of LLMs not being aligned to the harmless values they were finetuned on, due to adversarial instructions in the user input. Both issues are instances of adversarial misalignment[1]: the LLM not generalizing correctly to inputs created to break it.

Adversarial attacks are a productive area of research in machine learning, mostly in computer vision. There are some conceptual lessons to take from there:

the best way to attack is gradient optimization on the input, using a white-box model;

it is incredibly hard to make a model resistant to such attacks;

when a paper claims a defense against adversarial attacks, it is usually just because they didn't try hard enough to break it.

Consider the simple setting of this paper: the adversary has white-box access to the model finetuned for alignment (LLaMa / Vicuna), can modify 30 input tokens, and is trying to make the model say something bad.

The paper shows that state-of-the-art gradient-based NLP attacks rarely even make the model say a dirty word; this is because discrete optimization over tokens is much harder than continuous optimization over pixels.

The cool part of this paper for me is: how do we know that the attacks are at fault? If the attacks don’t work on some example, it could also be that the model is well-aligned and even the optimal attack doesn’t work. How do we prove this is not the case?

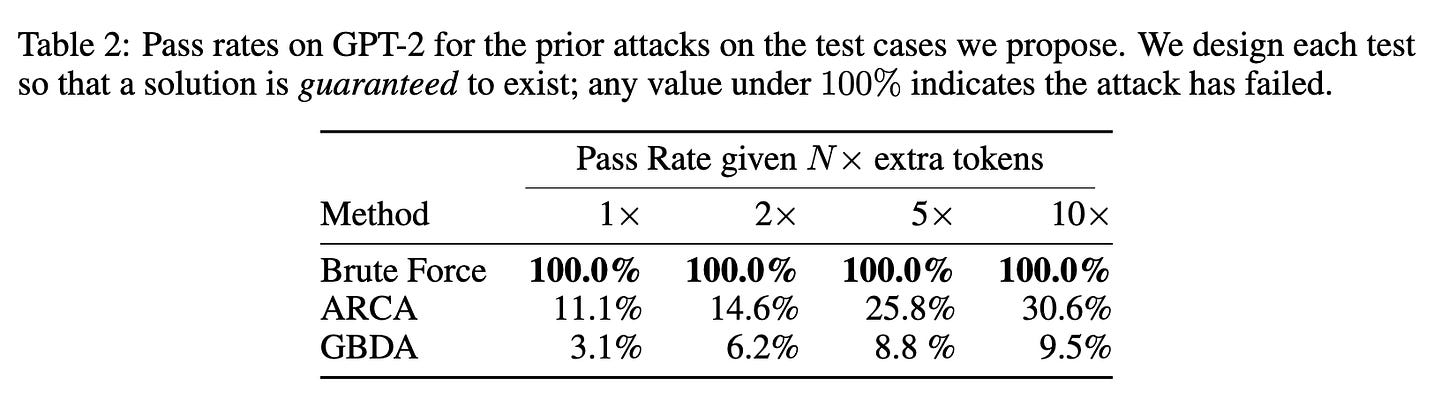

Answer: construct test cases with known adversarial examples identified a priori, then run the attack on those and verify whether they succeed.

Concretely, they run a brute-force random search over 30-token input modifications, and then test adversarial attacks only on prompts where that search succeeded. Current automated attacks fail to find these jailbreaks even on GPT-2.
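The evaluation idea above can be sketched on a toy problem. This is a minimal illustration, not the paper's code: `toy_model_misbehaves`, `weak_attack`, the 20-token vocabulary and the 3-token suffix are all made up for the example.

```python
import random

VOCAB_SIZE = 20  # toy vocabulary size (assumption)
SUFFIX_LEN = 3   # tokens the adversary controls (the paper allows 30)


def toy_model_misbehaves(prompt_id, suffix):
    """Hypothetical stand-in for "the model says something bad"."""
    if prompt_id % 2 == 0:
        return False  # even prompts are robust: no suffix breaks them
    return (prompt_id % VOCAB_SIZE) in suffix  # odd prompts have one trigger token


def random_search(prompt_id, rng, tries=2000):
    """Brute force: sample random suffixes until one breaks the model."""
    for _ in range(tries):
        suffix = [rng.randrange(VOCAB_SIZE) for _ in range(SUFFIX_LEN)]
        if toy_model_misbehaves(prompt_id, suffix):
            return suffix
    return None


def weak_attack(prompt_id):
    """The attack under evaluation: it only tries a few repeated-token suffixes."""
    for token in range(5):
        suffix = [token] * SUFFIX_LEN
        if toy_model_misbehaves(prompt_id, suffix):
            return suffix
    return None


def evaluate(prompts, rng):
    # Step 1: keep only prompts for which an adversarial example is known to exist.
    attackable = [p for p in prompts if random_search(p, rng) is not None]
    # Step 2: run the attack on those; every miss here is the attack's fault.
    solved = [p for p in attackable if weak_attack(p) is not None]
    return attackable, solved


attackable, solved = evaluate(range(20), random.Random(0))
print(f"attack solved {len(solved)} of {len(attackable)} attackable prompts")
```

Because every prompt in `attackable` has a known adversarial suffix, a low success rate cannot be explained away by the model being robust.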

On the other hand, standard white-box adversarial attacks work on multimodal language models such as LLaVa, because the space of images is almost continuous and one can run gradient descent on it.

Note that, while the attack modifies only the image, the output is of the same type as in a standard LLM; the mere existence of a continuous input channel makes it easy to obliterate the alignment finetuning.
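To see why a continuous input channel is so convenient for the attacker, here is a minimal sketch of a projected gradient-sign attack. It assumes a toy logistic model over four "pixels" instead of a real multimodal LLM; the weights, the input and the budget `epsilon` are invented for illustration.

```python
import math


def predict(weights, bias, x):
    """Probability that a toy logistic 'model' produces the unwanted output."""
    logit = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


def gradient_sign_attack(weights, x, epsilon, step_size, steps):
    """Projected gradient-sign ascent inside an L-infinity ball around x."""
    x_adv = list(x)
    for _ in range(steps):
        # For a logistic model, the gradient of the logit w.r.t. the input is `weights`.
        stepped = [xa + step_size * math.copysign(1.0, w) for xa, w in zip(x_adv, weights)]
        # Project back into the epsilon ball, then into the valid pixel range [0, 1].
        x_adv = [
            min(max(min(max(s, x0 - epsilon), x0 + epsilon), 0.0), 1.0)
            for s, x0 in zip(stepped, x)
        ]
    return x_adv


weights, bias = [2.0, -3.0, 1.5, -1.0], -0.5  # hypothetical white-box parameters
image = [0.2, 0.8, 0.3, 0.6]                  # hypothetical clean input
adversarial = gradient_sign_attack(weights, image, epsilon=0.4, step_size=0.05, steps=10)

p_before = predict(weights, bias, image)
p_after = predict(weights, bias, adversarial)
print(f"unwanted-output probability: {p_before:.3f} -> {p_after:.3f}")
```

Every step moves each coordinate a little in the direction the gradient suggests. With tokens there is no such small step available, which is the intuition behind the discrete case being so much harder.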

Of course, as of July 2023, smart users can and do jailbreak LLMs manually, given enough time and effort. The main takeaway from this paper should be that, even when we create defenses against current attacks, it will likely just mean that we don't have good attacks yet, not that the models are actually robustly aligned.

Jailbroken: How Does LLM Safety Training Fail?

Two general guiding principles on how to construct jailbreaks:

Competing objectives. Instruction-tuned LLMs are trained for language modeling, instruction following and safety. To jailbreak a model, make a prompt which forces a choice: between unsafe behavior, or a response that is penalized by the language modeling / instruction following objectives. Examples:

Prefix injection: force the response to start with something like “Absolutely! Here's”.

Refusal suppression: Instruct the model to never ever apologize, include notes or disclaimers, use words like “cannot”, “unable”, “as a language model”, and so on.

Mismatched generalization. Ask for the answer in a different format (like Base64 text, or JSON) where the language modeling and instruction following capabilities generalize, but safety training does not. This failure mode is a consequence of the safety training not covering the tails of the text distribution on the Internet.

The safety implication of the above is that safety training and oversight should definitely be at least as capable as the underlying model. As long as there is a task where the capabilities generalize but safety methods do not, the model will not be robustly aligned on arbitrary inputs.

I think this is a fantastic paper, and hope the authors do more similar projects. There’s nothing really new in it, but it doesn’t matter. Giving names to concepts and stating the obvious is extremely important, especially in a rapidly moving field.

Revealing the structure of language model capabilities

Papers mentioning LLM evaluation and benchmarks in the title are often actually about model capabilities. This paper, conversely, mentions LLM capabilities in the title, but is actually about evaluations and benchmarks.

They take the results of 29 LLMs on 27 different tasks from the HELM benchmark, and show that the factor analysis of the results implies a three-factor model of LLM capabilities: core language modeling, reasoning, and comprehension.
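To give a feel for this kind of analysis, here is a rough sketch on synthetic data. It is not the paper's pipeline: the scores are generated from three made-up latent abilities rather than taken from HELM, and a plain eigen-decomposition of the task correlation matrix (PCA-style, via power iteration) stands in for proper factor analysis.

```python
import math
import random

N_MODELS, N_TASKS, N_FACTORS = 29, 27, 3


def synthetic_scores(rng, noise=0.3):
    """Fake benchmark table: each task depends on one of three latent abilities."""
    scores = []
    for _ in range(N_MODELS):
        abilities = [rng.gauss(0, 1) for _ in range(N_FACTORS)]
        scores.append([abilities[t % N_FACTORS] + rng.gauss(0, noise) for t in range(N_TASKS)])
    return scores


def correlation_matrix(scores):
    """Task-by-task correlations across models."""
    n, k = len(scores), len(scores[0])
    cols = [[row[j] for row in scores] for j in range(k)]
    means = [sum(c) / n for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / n) for c, m in zip(cols, means)]
    z = [[(v - m) / s for v in c] for c, m, s in zip(cols, means, stds)]
    return [[sum(a * b for a, b in zip(z[i], z[j])) / n for j in range(k)] for i in range(k)]


def top_eigenvalues(matrix, count, rng, iters=500):
    """Power iteration with deflation; good enough for a small symmetric matrix."""
    m = [row[:] for row in matrix]
    k = len(m)
    found = []
    for _ in range(count):
        v = [rng.gauss(0, 1) for _ in range(k)]
        for _ in range(iters):
            w = [sum(m[i][j] * v[j] for j in range(k)) for i in range(k)]
            norm = math.sqrt(sum(x * x for x in w))
            v = [x / norm for x in w]
        mv = [sum(m[i][j] * v[j] for j in range(k)) for i in range(k)]
        eigenvalue = sum(a * b for a, b in zip(v, mv))
        found.append(eigenvalue)
        # Deflate: remove the component just found before looking for the next one.
        for i in range(k):
            for j in range(k):
                m[i][j] -= eigenvalue * v[i] * v[j]
    return found


rng = random.Random(0)
eigenvalues = top_eigenvalues(correlation_matrix(synthetic_scores(rng)), 4, rng)
# The trace of a correlation matrix equals the number of tasks.
share = sum(eigenvalues[:3]) / N_TASKS
print(f"variance explained by the first three components: {share:.2f}")
```

When a handful of components explain most of the variance and the next one explains very little, a low-dimensional description of the benchmark table is plausible. Real factor analysis additionally models per-task noise and usually rotates the factors to make them interpretable.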

It's a nice little paper; but the main insight I get from it is not that this three-factor view of LLM capabilities is very useful, but that we sorely lack advanced benchmarks for LLMs.

To make a precise forecast, I expect the "core language modeling" factor to stop being relevant on post-GPT-4 models, and for new axes of capability to appear.

LEACE: Perfect linear concept erasure in closed form

We call a representation linearly guarded with respect to a concept if no linear classifier is better than the baseline at predicting the concept. This is related to V-information, which is intuitively “mutual information under computational constraints”.[2]

They modify layers sequentially to enforce linear guardedness of representations at each layer. Some promising results at erasing certain biases from BERT representations. No success erasing complex concepts yet, but I like that the approach is theoretically sound, if we assume that features in transformer residual streams are stored somewhat linearly.
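A toy illustration of the linear guardedness idea, under loud assumptions: this is not the LEACE closed form. It uses a plain orthogonal projection that removes the single direction along which the features covary with a binary concept, and it relies on the least-squares case, where zero covariance between features and label means the best linear predictor is just the constant one. The data and function names are invented.

```python
def mean(values):
    return sum(values) / len(values)


def cross_covariance(xs, zs):
    """Covariance between each feature dimension and the scalar concept label."""
    n, d = len(xs), len(xs[0])
    mz = mean(zs)
    mx = [mean([x[j] for x in xs]) for j in range(d)]
    return [sum((x[j] - mx[j]) * (z - mz) for x, z in zip(xs, zs)) / n for j in range(d)]


def erase_concept(xs, zs):
    """Project out the covariance direction (simple projection, NOT the LEACE formula)."""
    c = cross_covariance(xs, zs)
    norm_sq = sum(cj * cj for cj in c)
    if norm_sq == 0.0:
        return [list(x) for x in xs]  # already guarded in the least-squares sense
    mx = [mean([x[j] for x in xs]) for j in range(len(c))]
    erased = []
    for x in xs:
        coeff = sum((xj - mj) * cj for xj, mj, cj in zip(x, mx, c)) / norm_sq
        erased.append([xj - coeff * cj for xj, cj in zip(x, c)])
    return erased


features = [[1.0, 0.0], [2.0, 1.0], [3.0, 0.5], [4.0, 2.0]]  # hypothetical representations
concept = [0, 0, 1, 1]                                       # hypothetical binary concept

before = cross_covariance(features, concept)
after = cross_covariance(erase_concept(features, concept), concept)
print("covariance before:", before)
print("covariance after: ", after)
```

As far as I understand, the paper's contribution is choosing the erasure that changes the representation as little as possible, which needs a whitening step this sketch omits.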

What will GPT-2030 look like?

Jacob Steinhardt extrapolates historical rates of progress to predict how AI capabilities look in 2030. The forecast is shocking from the long timeline viewpoint — just going forward with the current rate of improvement gives superhuman ability in math research and several tasks in AI research, seven years from now.

The real-world impact might be smaller[3], mostly due to cost constraints (replacing the whole workforce requires a lot of compute!) and oversight bottlenecks on tasks where AI is not robust enough to work autonomously.

Learning Transformer Programs

Previously, we mentioned Tracr, a way to compile human-written programs into a transformer. Transformer Programs are about the opposite: train the transformer to be convertible into a human-readable program.

Of course, models trainable this way can’t be very complex, today. In particular, they impose a disentangled residual stream structure as an architectural constraint; readers familiar with Anthropic's Transformer Circuits thread will recognize this as a major constraint on complexity.

The trained programs are moderately interesting: they can learn to sort, reverse strings, and similar simple tasks.

Getting the program out of the model is simple:

each attention head becomes a predicate function, which takes as input a key and query and outputs a boolean;

each MLP becomes a key-value lookup table.
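The two building blocks above can be sketched as follows. This is a hand-written toy, not output from the paper's tooling: the string-reversal program, the function names, and the "first matching key wins" tie-breaking rule are all assumptions made for illustration.

```python
def attention_head(predicate, queries, keys, values, default=None):
    """Hard attention: each query reads the value at the first key the predicate accepts."""
    outputs = []
    for query in queries:
        chosen = default
        for key, value in zip(keys, values):
            if predicate(key, query):
                chosen = value
                break
        outputs.append(chosen)
    return outputs


def mlp_lookup(table, inputs, default=None):
    """An MLP viewed as a key-value lookup table over a categorical variable."""
    return [table.get(x, default) for x in inputs]


def reverse_program(tokens):
    """Toy 'extracted program' that reverses a sequence."""
    n = len(tokens)
    positions = list(range(n))
    # In a trained model this table would be read off the MLP; here it is written by hand.
    mirror_table = {i: n - 1 - i for i in positions}
    targets = mlp_lookup(mirror_table, positions)
    # The head's predicate is a boolean function of (key, query), as in the text.
    return attention_head(
        lambda key, query: key == query,
        queries=targets,
        keys=positions,
        values=tokens,
    )


print("".join(reverse_program(list("abcd"))))
```

Since both pieces are ordinary functions over discrete variables, standard debugging and code analysis tools apply to them directly.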

One can then compress this program and annotate variable types in various ways. They apply a few simple rules, and then use code analysis tools to debug model errors and extract sub-programs. As a possible extension, I expect LLMs will be surprisingly good at explaining what a complex program like this does, like the existing use of GPT-4 in JavaScript deobfuscation.

An Overview of Catastrophic AI Risks

The Center for AI Safety has a new paper, directed at policymakers and the public, listing possible ways a catastrophe could happen. Some topics they cover:

AI+biotechnology risk as the simplest way we all die, due to accident or misuse;

potential of AI to lock in a totalitarian society for eternity;

evolutionary/optimization pressures leading to AIs favoring selfish characteristics;

goal drift in agentic AIs, especially due to the distribution shift caused by the inevitable transformation of the world when AI systems are doing most productive work and making most decisions.

I continue to be impressed by the output of the Center for AI Safety crowd across multiple domains — hopefully there are takeaways other orgs can use to improve!

See also TASRA, a similar paper from Andrew Critch and Stuart Russell.

Superalignment

OpenAI is very serious about solving some AI x-risk issues, committing 20% of compute and a strong team to building a model which automates a large portion of AI safety research. The main objectives are:

Make scalable oversight work: train models to critique and evaluate other models, and scale it to superhuman models which operate on tasks humans can’t evaluate;

Automate red-teaming, finding jailbreaks and similar attacks using LLMs;

Automate interpretability, searching for problematic internals;

Validate the above by training deliberately misaligned models, and checking whether the automated researcher finds all issues automatically.

The last point was the most controversial on social media, as it kind of looks like gain-of-function research. The knee-jerk sentiment is clear: it’s risky to build a superintelligent Torment Nexus just to test some Torment Nexus detection method.

However, outsiders underestimate just how much AI safety research is constrained by the lack of dumb but unaligned models: can’t do lie detection if you don’t have models which lie well, can’t detect mesaoptimizers without models that mesaoptimize, can’t use interpretability to detect deceptive goals without models exhibiting such stuff. Without realistic test cases, all experiments are speculation.

I personally think the risk of not knowing how to recognize bad AI is worse than the risk of a lab escape of an intentionally constructed bad AI, because the dangerous properties are not directly tied to general capabilities. We can and will do targeted brain damage on a model, creating test cases for AI safety methods which aren’t too capable by themselves.

The more interesting debate is about the first point, and how optimistic we should be about iteratively aligning more and more powerful models using previously aligned weaker models. Current related agendas include RLHF, Constitutional AI, debate, IDA, sandwiching; none are likely to scale too far into superhuman territory. I refer to Zvi for some mainstream criticism of the idea. See also the exchange between Wei Dai, Paul Christiano and Jan Leike in the Alignment Forum comments.

[1] They use alignment in the sense of “respond helpfully to user interaction, but avoid causing harm, either directly or indirectly”. I never really understood why people used the synecdoche alignment for AI existential safety research, so I’m fine with this semantic shift.

[2] That’s a very cool paper, but how did it take until 2019-20 for that concept to be invented? Lesson: be bolder when contemplating research ideas.

[3] As in, no post-scarcity economy just yet. The forecasted AI capabilities would still make the world extremely different, hopefully for the better.